When Correlation Drops but Insight Rises

013 & 014 - Why Rookify’s Skill Tree “performed worse” after getting better at understanding chess.

Hey Folks, welcome back 👋🏿

If there’s one thing these past two weeks have taught me, it’s that sometimes progress feels like taking a step backward — not because you broke something, but because you finally started measuring it properly.

🧩 The Big Picture

Let’s rewind to Week 12, the week when the Skill Tree’s ability to map chess improvement areas to Elo levels had a significant breakthrough.

I’d finally confirmed that Rookify’s Skill Tree could measure chess ability in a meaningful way, but I also realised something deeper:

Chess improvement doesn’t follow a straight line.

Some skills, like spotting blunders or mastering basic tactics, scale steadily with repetition.

Others, such as positional understanding or endgame resilience, evolve through breakthrough moments that don’t fit a linear trend at all.

And yet, my old validation system was built entirely on linear correlation. It could capture the slow, steady gains but completely missed the “aha” moments.

So for Weeks 13 and 14, I set out to rebuild the Skill Tree’s analysis brain around that realisation, refactoring it to identify both steady growth and the unpredictable leaps in skill that chess players go through on their improvement journeys.

This led to four major engineering sprints, each designed to make the Skill Tree smarter, more contextual, and ultimately more human in how it understands improvement.

🧠 Sprints 1 to 4 - Teaching Rookify Context, Depth, and Nuance

The goal for this cycle was simple: make Rookify think like a coach, not a calculator.

Here’s what I’ve been implementing over the past two weeks:

1️⃣ Context Filters & Phase Detection

Until now, Rookify evaluated every move in isolation, treating all positions as if they were the same.

But chess is all about context. What’s good in an open Sicilian might be terrible in a cramped French structure.

So I built context filters to teach Rookify when and where a skill applies. Now it can distinguish between:

Game phases (opening, middlegame, endgame)

Position types (open, semi-open, closed)

Material states (ahead, equal, or behind)

This means tactical awareness is only evaluated in sharp positions, while positional play is scored in slow, closed ones.

In other words, the Skill Tree is now more selective about which skills it scores, depending on the type of position being analysed.
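Here is a minimal Python sketch of what a context filter along these lines could look like, assuming each position arrives as a FEN string. Everything specific in it is hypothetical: the thresholds, the crude d/e-file heuristic for open, semi-open and closed centres (standing in for "sharp" versus "slow"), and the skill names in `SKILL_CONTEXTS`. It illustrates the gating idea, not Rookify's actual rules.

```python
from dataclasses import dataclass

# Hypothetical thresholds, chosen for illustration only.
PIECE_VALUES = {"p": 1, "n": 3, "b": 3, "r": 5, "q": 9, "k": 0}
ENDGAME_NON_PAWN = 26   # non-pawn material (both sides) at or below this = endgame
OPENING_MOVES = 10      # full moves treated as "opening" if not already an endgame
MATERIAL_MARGIN = 2     # pawns of advantage needed to count as "ahead"/"behind"


@dataclass(frozen=True)
class Context:
    phase: str      # "opening" | "middlegame" | "endgame"
    structure: str  # "open" | "semi-open" | "closed"
    material: str   # "ahead" | "equal" | "behind", from the side to move


def classify(fen: str) -> Context:
    fields = fen.split()
    placement, side = fields[0], fields[1]
    fullmove = int(fields[5]) if len(fields) > 5 else 1

    white = black = non_pawn = 0
    pawn_files = {"P": set(), "p": set()}  # files (0 = a-file) holding pawns
    for rank in placement.split("/"):
        file = 0
        for ch in rank:
            if ch.isdigit():  # a run of empty squares
                file += int(ch)
                continue
            value = PIECE_VALUES[ch.lower()]
            if ch.isupper():
                white += value
            else:
                black += value
            if ch.lower() == "p":
                pawn_files[ch].add(file)
            else:
                non_pawn += value
            file += 1

    if non_pawn <= ENDGAME_NON_PAWN:
        phase = "endgame"
    elif fullmove <= OPENING_MOVES:
        phase = "opening"
    else:
        phase = "middlegame"

    # Crude heuristic: on the d- and e-files (indices 3 and 4), count how many
    # colours still have a pawn. Any empty central file means "open".
    colours = [(f in pawn_files["P"]) + (f in pawn_files["p"]) for f in (3, 4)]
    if 0 in colours:
        structure = "open"
    elif 1 in colours:
        structure = "semi-open"
    else:
        structure = "closed"

    balance = white - black if side == "w" else black - white
    if balance >= MATERIAL_MARGIN:
        material = "ahead"
    elif balance <= -MATERIAL_MARGIN:
        material = "behind"
    else:
        material = "equal"
    return Context(phase, structure, material)


# Contexts in which each (hypothetical) skill is scored at all.
SKILL_CONTEXTS = {
    "tactical_awareness": {"structure": {"open", "semi-open"}},
    "positional_play": {"structure": {"closed"}},
    "endgame_technique": {"phase": {"endgame"}},
}


def applicable_skills(ctx: Context) -> list[str]:
    return [
        skill
        for skill, rules in SKILL_CONTEXTS.items()
        if all(getattr(ctx, field) in allowed for field, allowed in rules.items())
    ]


start = classify("rnbqkbnr/pppppppp/8/8/8/8/PPPPPPPP/RNBQKBNR w KQkq - 0 1")
print(start, applicable_skills(start))  # ... ['positional_play']
```

Under this crude heuristic the starting position comes out as closed, since both colours still have pawns on the d- and e-files; a real filter would need richer signals such as pawn tension, open lines and king safety.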

2️⃣ Skill Consolidation

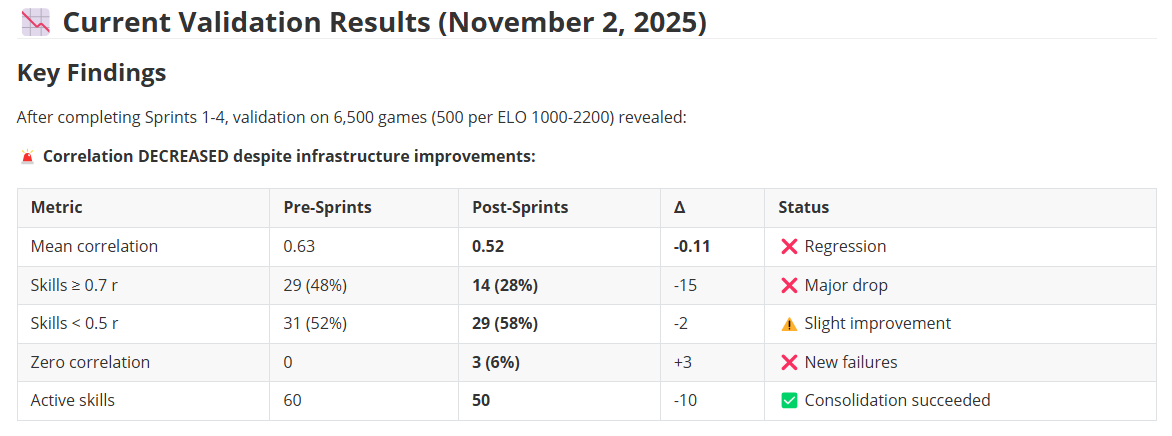

Next, I trimmed the Skill Tree by merging overlapping skills and removing redundancies.

The total count went from 60 → 50 sharper, cleaner skills.

This made the taxonomy more elegant and improved interpretability during analysis. It’s a bit like pruning a bonsai tree: less noise, more signal.

3️⃣ Non-Linear Growth Modelling

This sprint was directly born from that Week 12 revelation.

I implemented Spearman’s rank correlation and piecewise regression models to detect skills that develop in leaps rather than steps.

Now, if a player suddenly “gets” positional play or starts handling endgames with newfound maturity, Rookify can actually see that shift even if it doesn’t fit a perfect linear slope.

The result is a more realistic model of player development, one that mirrors how real chess improvement actually happens.

4️⃣ Composite Features

Finally, I added a layer of meta-skills. These are higher-order indices built from multiple signals.

For example:

`defensive_tenacity_index` combines resourcefulness, time pressure handling, and blunder recovery.

`pawn_structure_planning_index` merges mobility, doubled-pawn management, and central control.

These composites capture “how” a player thinks rather than just “what” they play — making the Skill Tree’s evaluations more holistic.
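A composite like `defensive_tenacity_index` can be sketched as a weighted mean of its sub-skill scores. The component names below follow the post, but the weights, the [0, 1] score range and the choice to renormalise (and log a warning) when an input is missing are assumptions for illustration.

```python
import logging

logging.basicConfig(level=logging.WARNING)
log = logging.getLogger("composites")

# Hypothetical weights; the component names follow the post, the numbers do not.
COMPOSITES = {
    "defensive_tenacity_index": {
        "resourcefulness": 0.4,
        "time_pressure_handling": 0.3,
        "blunder_recovery": 0.3,
    },
    "pawn_structure_planning_index": {
        "mobility": 0.3,
        "doubled_pawn_management": 0.3,
        "central_control": 0.4,
    },
}


def composite_score(name, skills):
    """Weighted mean of the available sub-skill scores (each assumed in [0, 1]).

    Missing inputs are logged and the remaining weights are renormalised, so a
    composite doesn't silently sink towards zero because a signal is absent.
    Returns None if none of its inputs are available.
    """
    weights = COMPOSITES[name]
    present = {k: w for k, w in weights.items() if skills.get(k) is not None}
    missing = sorted(set(weights) - set(present))
    if missing:
        log.warning("%s: missing inputs %s", name, missing)
    total = sum(present.values())
    if total == 0:
        return None
    return sum(w * skills[k] for k, w in present.items()) / total


print(composite_score("defensive_tenacity_index", {
    "resourcefulness": 0.6, "time_pressure_handling": 0.5, "blunder_recovery": 0.8,
}))  # ≈ 0.63
print(composite_score("pawn_structure_planning_index", {
    "mobility": 0.5, "central_control": 0.7,
}))  # ≈ 0.614, plus a warning about doubled_pawn_management
```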

📊 Brain Got Smarter, but the Scores Got Worse

Once all these upgrades were in place, I re-ran the Elo validation on over 6,500 Lichess games spanning ratings from 1000 to 2200.

And the results?

Let’s just say my heart sank a little.

The mean correlation actually dropped from 0.63 → 0.52.

Twenty-nine skills fell back into weak correlation territory (r < 0.5).

On the surface, that looked like regression. But digging deeper revealed something else entirely.

Rookify wasn’t getting worse, it was getting more honest.

By becoming more context-aware, it stopped overfitting noise. It no longer rewarded skills in irrelevant scenarios.

The drop in correlation wasn’t a failure, it was the model calling me out for teaching it to be too nuanced for its own good.

🧠 The Post-Mortem

That said, some genuine issues surfaced during the investigation:

5 skills had inverted logic. They were scoring in the wrong direction (e.g., rewarding all exchanges instead of favourable ones).

3 skills had missing detection logic. They existed on paper but never fired in practice.

17 skills were too lenient. Even beginners were maxing them out, killing their discriminative power.

4 composite features were missing proper input signals.

So while the system was structurally sound, the logic layer (the part that interprets the data) clearly needed more fine-tuning.

🧩 Sprint 5 - Debugging the Brain

Which brings us to what’s next — Sprint 5, a phase purely focused on teaching Rookify to think straight.

The plan:

1️⃣ Fix inverted formulas so skill progression correlates correctly with Elo.

2️⃣ Add missing detectors for nuanced patterns like triangulation and prophylaxis.

3️⃣ Tighten over-generous formulas to make strong play actually mean something.

4️⃣ Stabilise composite features by adding diagnostic logging and adaptive weighting.

If all goes well, this should push the mean correlation back toward 0.67+ (according to Claude), with at least 35 skills in the strong or moderate range.

💡 Lessons from Weeks 13–14

The main takeaway from these two weeks?

Making something smarter doesn’t automatically make it better, but it does make it more truthful.

Here are a few reflections that stuck with me:

Intelligence without calibration creates chaos. Context filters can’t help if your logic is off.

Linear metrics miss non-linear growth. Real improvement looks messy: sudden, uneven, and full of detours.

When your system gets honest, it hurts before it helps. Losing correlation wasn’t failure; it was Rookify telling me where it was lying to me before.

🚀 What’s Next

Sprint 5 will carry me through the next two weeks. Once it’s complete, I’ll rerun validation on 10k+ games and see whether the Sprint 5 updates yield the positive results I’m hoping for.

I’ll admit that engineering this Skill Tree has been tougher than I envisioned, and progress is slow. But since it’s going to be the blueprint behind the AI coaching capabilities I have planned, it’s crucial that I get this right.

Thanks for reading,

– Anthon

Chief Vibes Officer @ Rookify

By the way, if you’re a chess player, I’d love to hear about your own improvement journey.

What’s been frustrating? What’s actually helped? And what kind of innovations do you wish existed in the chess world? If you’ve got 3–5 minutes to spare, please fill out this short survey. It would mean a lot and will directly help shape how Rookify evolves.

Thanks again